Best Big Data Courses on Udemy

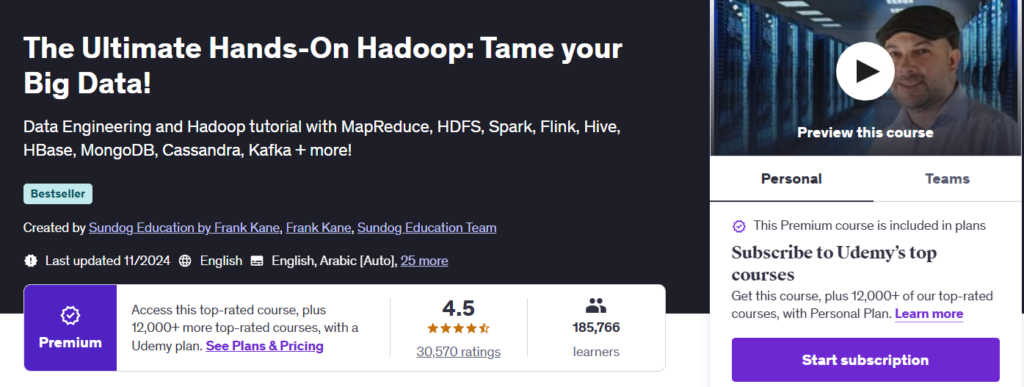

The Ultimate Hands-On Hadoop: Tame your Big Data!

Learn and master the most popular data engineering technologies in this comprehensive course, taught by a former engineer and senior manager from Amazon and IMDb. We’ll go well beyond Hadoop itself and dive into the many distributed systems you may need to integrate with it.

With this Hadoop tutorial, you’ll not only understand what those systems are and how they fit together, but also go hands-on and learn how to use them to solve real business problems!

This course is comprehensive, covering over 25 different technologies in over 14 hours of video lectures. It’s filled with hands-on activities and exercises, so you get some real experience in using Hadoop – it’s not just theory.

You’ll find a range of activities in this course for people at every level. If you’re a project manager who just wants to learn the buzzwords, there are web UIs for many of the activities in the course that require no programming knowledge. If you’re comfortable with command lines, we’ll show you how to work with them too. And if you’re a programmer, I’ll challenge you with writing real scripts on a Hadoop system using Scala, Pig Latin, and Python.

You’ll walk away from this course with a real, deep understanding of Hadoop and its associated distributed systems, and you’ll be able to apply Hadoop to real-world problems. Plus, a valuable completion certificate is waiting for you at the end!

- Install and work with a real Hadoop installation right on your desktop with Hortonworks (now part of Cloudera) and the Ambari UI

- Manage big data on a cluster with HDFS and MapReduce

- Write programs to analyze data on Hadoop with Pig and Spark

- Store and query your data with Sqoop, Hive, MySQL, HBase, Cassandra, MongoDB, Drill, Phoenix, and Presto

- Design real-world systems using the Hadoop ecosystem

- Learn how your cluster is managed with YARN, Mesos, ZooKeeper, Oozie, Zeppelin, and Hue

- Handle streaming data in real time with Kafka, Flume, Spark Streaming, Flink, and Storm

What you will learn from this course:

- Design distributed systems that manage “big data” using Hadoop and related data engineering technologies.

- Use HDFS and MapReduce for storing and analyzing data at scale.

- Use Pig and Spark to create scripts to process data on a Hadoop cluster in more complex ways.

- Analyze relational data using Hive and MySQL

- Analyze non-relational data using HBase, Cassandra, and MongoDB

- Query data interactively with Drill, Phoenix, and Presto

- Choose an appropriate data storage technology for your application

- Understand how Hadoop clusters are managed by YARN, Tez, Mesos, ZooKeeper, Zeppelin, Hue, and Oozie.

- Publish data to your Hadoop cluster using Kafka, Sqoop, and Flume

- Consume streaming data using Spark Streaming, Flink, and Storm
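MapReduce, mentioned in the outcomes above, splits a job into three parts. A map step emits key/value pairs, a shuffle groups those pairs by key, and a reduce step combines each group. The sketch below simulates that flow in a single Python process for the classic word-count problem. It is only an illustration of the programming model, not Hadoop's API: real jobs are written against Hadoop's Java classes or run through Hadoop Streaming. The function names here are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    # Mapper: emit (word, 1) for every word in one input line
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Shuffle/sort: group every value emitted for the same key, sorted by key
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Reducer: combine all grouped values for a single key
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    # Chain the three phases; on a cluster each phase would run on many nodes
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, vs) for k, vs in shuffle(pairs).items())


print(word_count(["the quick fox", "the lazy dog"]))
# {'dog': 1, 'fox': 1, 'lazy': 1, 'quick': 1, 'the': 2}
```

On a real cluster, HDFS stores the input in blocks across nodes. Mappers run close to those blocks, and the framework performs the shuffle over the network.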

Rating: 4.5 | Course Duration: 14.5 hrs | Total Articles: 9 | Downloadable Resources: 9

Promo Code: UDEAFFLP12025

Data Lake Mastery: The Key to Big Data & Data Engineering

Are you ready to dive into the world of Data Lakes and transform your skills in Cloud Data Engineering?

This skill is a game-changer in data engineering, and you’re making a wise move by diving into it.

This is the only course you need to master architecting and implementing a full-blown, state-of-the-art data lake!

This comprehensive course offers you the ultimate journey from basic concepts to mastering sophisticated data lake architectures and strategies.

Why Choose This Course?

- Complete Data Lake Guide: From setting up AWS accounts to mastering workflow orchestration, this course covers every angle of Data Lakes.

- Step-by-Step Mastery: Whether you’re starting from scratch or looking to deepen your expertise, this course offers a structured, step-by-step journey from beginner basics to advanced mastery in Data Lake engineering.

- State-of-the-Art Expertise: Stay on the cutting edge of Data Lake technologies and best practices, with a focus on the most recent tools and methods.

- Practical & Hands-On: Engage with real-life scenarios and hands-on AWS tasks to solidify your understanding.

- Holistic Understanding: Beyond practical skills, gain a comprehensive understanding of all critical concepts, theories, and best practices in Data Lakes, ensuring you not only know the ‘how’ but also the ‘why’ behind each aspect.

What Will You Learn?

Throughout this course, we will learn all the relevant concepts and implement everything within AWS, the most widely used cloud platform, ensuring practical, hands-on experience with the industry standard.

However, the knowledge and skills you acquire are designed to be universally applicable, equipping you with the expertise to operate confidently across any cloud environment.

- Foundational Concepts: Understand what Data Lakes are, their benefits, and how they differ from traditional data warehouses.

- Architecture Mastery: Dive deep into Data Lake architecture, understanding different zones, tools, and data formats.

- Data Ingestion Techniques: Master various data ingestion methods, including batch and event-driven ingestion, and learn to use AWS Glue and Kinesis.

- Storage Management: Explore key concepts of data storage management in Data Lakes, such as partitioning, lifecycle management, and versioning.

- Processing and Transformation: Learn about Hadoop, Spark, and how to optimize data processing and transformation in Data Lakes.

- Workflow Orchestration: Understand how to automate data workflows in a Data Lake environment, using retail data scenarios for practical insights.

- Advanced Analytics: Unlock the power of analytics in Data Lakes with tools like Power BI, QuickSight, and Jupyter Notebooks.

- Monitoring and Security: Learn the essentials of monitoring Data Lakes and implementing robust security measures.
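The Storage Management bullet mentions partitioning. In a data lake this usually means organizing files into Hive-style `key=value` directories, often by date, so that query engines such as Spark or Amazon Athena can skip partitions a query does not need. The minimal Python sketch below assumes a hypothetical bucket `example-data-lake`, a `raw` zone, and daily partitions. The helpers `partition_path` and `prune` are illustrative only and are not part of any AWS SDK.

```python
from datetime import date


def partition_path(base: str, table: str, day: date) -> str:
    """Build a Hive-style partitioned prefix (key=value directories) for one day of data."""
    return f"{base}/{table}/year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"


def prune(paths: list[str], year: int, month: int) -> list[str]:
    """Keep only the partitions for one month, mimicking how engines prune partitions."""
    needle = f"/year={year:04d}/month={month:02d}/"
    return [p for p in paths if needle in p]


# Hypothetical bucket and zone names, for illustration only
base = "s3://example-data-lake/raw"
paths = [partition_path(base, "sales", date(2024, m, d)) for m, d in [(1, 15), (1, 31), (2, 1)]]

# Keeps the two January partitions and skips the February one
print(prune(paths, 2024, 1))
```

Because the partition values are encoded in the path, a query filtered on `year` and `month` only needs to read the matching prefixes instead of scanning the whole table.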

What you will learn from this course:

- Master the complete implementation of full-scale Data Lake solutions in the cloud

- Apply Data Lake concepts professionally in cloud data engineering

- Create a multi-layered security strategy for Data Lake protection

- Design & implement efficient data ingestion strategies in AWS

- Master Data Lake Architecture for effective cloud implementations

- Master Data Lake Governance & Security

- Master Leadership & Strategy Essentials for Successful Data Lakes

- Learn comprehensive access control strategies within Data Lakes

- Understand and implement robust monitoring and security in Data Lakes

- Enhance your career prospects with advanced Data Lake skills and knowledge

Rating: 4.6 | Course Duration: 10 hrs | Total Articles: 3 | Downloadable Resources: 24

Promo Code: UDEAFFLP12025

Spark and Python for Big Data with PySpark

This course will teach the basics with a crash course in Python, then continue on to using Spark DataFrames with the Spark 2.0 syntax! Once we’ve done that, we’ll go through how to use the MLlib machine learning library with the DataFrame syntax and Spark. All along the way, you’ll have exercises and Mock Consulting Projects that put you right into a real-world situation where you need to use your new skills to solve a real problem!

We also cover Spark technologies like Spark SQL and Spark Streaming, plus advanced models like Gradient Boosted Trees! After you complete this course, you will feel comfortable putting Spark and PySpark on your resume! This course also has a full 30-day money-back guarantee and comes with a LinkedIn Certificate of Completion!

If you’re ready to jump into the world of Python, Spark, and Big Data, this is the course for you!

What you will learn from this course:

- Use Python and Spark together to analyze Big Data

- Learn how to use the new Spark 2.0 DataFrame Syntax

- Work on Consulting Projects that mimic real world situations!

- Classify Customer Churn with Logistic Regression

- Use Spark with Random Forests for Classification

- Learn how to use Spark’s Gradient Boosted Trees

- Use Spark’s MLlib to create Powerful Machine Learning Models

- Learn about the Databricks Platform!

- Get set up on Amazon Web Services EC2 for Big Data Analysis

- Learn how to use AWS Elastic MapReduce Service!

- Learn how to leverage the power of Linux with a Spark Environment!

- Create a Spam filter using Spark and Natural Language Processing!

- Use Spark Streaming to Analyze Tweets in Real Time!

Rating: 4.5 | Course Duration: 10.5 hrs | Total Articles: 4 | Downloadable Resources: 4

Promo Code: UDEAFFLP12025

Taming Big Data with Apache Spark and Python – Hands On!

In this course, you’ll learn and master the art of framing data analysis problems as Spark problems through over 20 hands-on examples, and then scale them up to run on cloud computing services. You’ll be learning from an ex-engineer and senior manager from Amazon and IMDb.

- Learn the concepts of Spark’s DataFrames and Resilient Distributed Datasets

- Develop and run Spark jobs quickly using Python and PySpark

- Translate complex analysis problems into iterative or multi-stage Spark scripts

- Scale up to larger data sets using Amazon’s Elastic MapReduce service

- Understand how Hadoop YARN distributes Spark across computing clusters

- Learn about other Spark technologies, like Spark SQL, Spark Streaming, and GraphX

By the end of this course, you’ll be running code that analyzes gigabytes worth of information – in the cloud – in a matter of minutes.

This course uses the familiar Python programming language; if you’d rather use Scala to get the best performance out of Spark, see my “Apache Spark with Scala – Hands On with Big Data” course instead.

You’ll get warmed up with some simple examples of using Spark to analyze movie ratings data and the text of a book. Once you’ve got the basics under your belt, we’ll move on to some more complex and interesting tasks. We’ll use a million movie ratings to find movies that are similar to each other, and you may even discover some new movies you’ll enjoy in the process! We’ll analyze a social graph of superheroes, learn who the most “popular” superhero is, and develop a system to find “degrees of separation” between superheroes. Are all Marvel superheroes within a few degrees of being connected to The Incredible Hulk? You’ll find the answer.
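The “degrees of separation” exercise is a breadth-first search (BFS). You start from one hero, visit every hero they appear with, then those heroes' neighbors, and so on, counting hops until you reach the target. The course distributes this search across a Spark cluster. The sketch below is only a single-machine Python version running on a small, hypothetical graph. It is meant to show the algorithm, not to reproduce the course's own code. The hero connections in it are invented for illustration.

```python
from collections import deque
from typing import Optional


def degrees_of_separation(graph: dict[str, set[str]], start: str, target: str) -> Optional[int]:
    """Return the number of hops between two heroes, or None if they are not connected."""
    if start == target:
        return 0
    distance = {start: 0}  # hops from start; also serves as the visited set
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, ()):
            if neighbor not in distance:
                distance[neighbor] = distance[node] + 1
                if neighbor == target:
                    return distance[neighbor]
                queue.append(neighbor)
    return None


# Hypothetical toy graph: an edge means two heroes appeared in the same comic
graph = {
    "Hulk": {"Thor", "Iron Man"},
    "Thor": {"Hulk", "Loki"},
    "Iron Man": {"Hulk", "Spider-Man"},
    "Loki": {"Thor"},
    "Spider-Man": {"Iron Man", "Daredevil"},
    "Daredevil": {"Spider-Man"},
    "Howard the Duck": set(),
}

print(degrees_of_separation(graph, "Daredevil", "Hulk"))  # 3
```

BFS explores the graph one "ring" of neighbors at a time, so the first time it reaches the target, the hop count is guaranteed to be the shortest one. The same level-by-level structure is what makes the algorithm a good fit for an iterative Spark job.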

This course is very hands-on; you’ll spend most of your time following along with the instructor as we write, analyze, and run real code together, both on your own system and in the cloud using Amazon’s Elastic MapReduce service. Seven hours of video content are included, with over 20 real examples of increasing complexity that you can build, run, and study yourself. Move through them at your own pace, on your own schedule. The course wraps up with an overview of other Spark-based technologies, including Spark SQL, Spark Streaming, and GraphX.

What you will learn from this course:

- Use DataFrames and Structured Streaming in Spark 3

- Use the MLlib machine learning library to answer common data mining questions

- Understand how Spark Streaming lets you process continuous streams of data in real time

- Frame big data analysis problems as Spark problems

- Use Amazon’s Elastic MapReduce service to run your job on a cluster with Hadoop YARN

- Install and run Apache Spark on a desktop computer or on a cluster

- Use Spark’s Resilient Distributed Datasets to process and analyze large data sets across many CPUs

- Implement iterative algorithms such as breadth-first search using Spark

- Understand how Spark SQL lets you work with structured data

- Tune and troubleshoot large jobs running on a cluster

- Share information between nodes on a Spark cluster using broadcast variables and accumulators

- Understand how the GraphX library helps with network analysis problems

Rating: 4.5 | Course Duration: 7 hrs | Total Articles: 3 | Downloadable Resources: 25

Promo Code: UDEAFFLP12025

Learn Big Data: The Hadoop Ecosystem Masterclass

In this course, you will learn Big Data using the Hadoop ecosystem. Why Hadoop? It is one of the most sought-after skills in the IT industry.

The course is aimed at Software Engineers, Database Administrators, and System Administrators who want to learn about Big Data. Other IT professionals can also take this course, but might have to do some extra research to understand some of the concepts.

You will learn how to use the most popular software in the Big Data industry at the moment, using both batch processing and real-time processing. This course will give you enough background to discuss real problems and solutions with experts in the industry. Adding these technologies to your LinkedIn profile can help you attract recruiters and land interviews at some of the most prestigious companies in the world.

The course is very practical, with more than 6 hours of lectures. You will want to try everything out yourself, which adds several more hours of learning. If you get stuck with the technology while trying, support is available: I will answer your messages on the message boards, and we have a Facebook group where you can post questions.

- This course is for anyone who wants to know how Big Data works and what technologies are involved

- The main focus is on the Hadoop ecosystem. We don’t cover any technologies that are not part of the Hortonworks Data Platform stack

- The course compares MapR, Cloudera, and Hortonworks, but we only use the Hortonworks Data Platform (HDP) in the demos

Rating: 4.5 | Course Duration: 6 hrs | Total Articles: 1 | Downloadable Resources: 1

Promo Code: UDEAFFLP12025